AI and financial assets are changing the game. Learn how AI integration can optimize asset management, boost decision-making, and improve financial outcomes.

This machine learning prediction system is the product of 7 generations of work toward a commercial result, in collaboration with Cornell’s Masters of Applied Statistics program. We are now entering our 11th year of working with Cornell, and for many of those years we have worked on machine learning predictions, with many failed attempts along the way.

Paradoxically, in such endeavors, failure is often disguised as success. The healthy skepticism of a (slightly) grey-haired professional investor has been an invaluable lens for leading A.I. projects. A sober fiduciary ethos and risk-minded thinking keep these small failures far removed from client accounts and isolated to the R&D environment of tools like AWS SageMaker and Jupyter Notebooks. We also rely on heavy data visualization to help validate or invalidate batch results.

We have had our successes too. Below we outline the schematic of our A.I. predictions, which assimilates many of those successes and avoids the pitfalls encountered along the journey. We shall keep the technical jargon out of it, as our intended audience is sophisticated investors.

As all the students have likely grown tired of hearing, “An A.I. Stock Market prediction is nothing if not first trustworthy.”

The prediction system is built using a hierarchical composition of various prediction systems. In this way, the machine learning predictions mimic a prediction scheme that futurist and author Ray Kurzweil talks about in his book, “How to Create a Mind.” That book is largely concerned with the building of a general intelligence. Kurzweil draws strong parallels between successful machine learning or artificial intelligence and the way the human brain works in a hierarchical manner, giving rise to a neocortex capable of abstract and creative thought.

Another analogy is that of weather forecasting. Weather forecasting represents the composite of tens of thousands of barometric, wind, humidity and temperature readings from all over the globe. When these are combined with centuries of recorded weather for any given date, meteorologists can now forecast the weather better than at any time in our history. You don’t forecast the weather in Poughkeepsie the same way as you would for San Antonio, TX. Likewise, the forecast for July 25th is quite different from the forecast for December 25th.

So it is with predictions for stocks. In order to create a globally efficacious prediction model, we believe that you can build it up from successful smaller models. But we knew we had to capture the individual quirks of each stock.

Stock market predictions will never see the type of success that weather forecasting has. We must recognize our fallibility. Stock prices are an emergent property of many-layered, large-scale socio-economic systems. Not quite chaotic, but much more chaotic than weather. Recall Benjamin Martin’s shooting advice in the movie “The Patriot”: “Aim small, miss small.”

If your expectation for machine learning forecasts is to get rich quick, you will see the usual result. If your expectation is to consistently add a few incremental percentage points of return to a prudent investment process, then you are in the right place.

With our extensive background in portfolio optimization, generating expected returns for investment candidates is a paramount input. For many years, our various software platforms, including Portfolio Thinktank, Gravity Investments, and everything we do at www.gsphere.net, have built portfolios where the expected returns are produced for any stock, fund, currency or asset based on a multi-sampling expert system. That is to say, we use historical performance to predict the future by more optimally selecting (and weighting) multiple historical periods previously observed to offer a predictive advantage.
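As a rough illustration of blending several historical periods into one expected return, here is a minimal sketch. The function name, the three lookback periods, and the weights are all hypothetical; the text does not say how periods are selected or weighted, so a plain weighted average stands in for that step.

```python
from typing import Mapping


def blended_expected_return(
    period_returns: Mapping[str, float],
    period_weights: Mapping[str, float],
) -> float:
    """Weighted average of annualized returns from several lookback periods."""
    total_weight = sum(period_weights[name] for name in period_returns)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(
        period_returns[name] * period_weights[name] for name in period_returns
    )
    return weighted_sum / total_weight


# Hypothetical annualized returns for one asset over three lookback periods.
returns = {"1y": 0.12, "3y": 0.08, "5y": 0.10}
# Hypothetical weights; assumed here, not taken from the article.
weights = {"1y": 0.5, "3y": 0.3, "5y": 0.2}

expected = blended_expected_return(returns, weights)
print(f"Blended expected return: {expected:.2%}")
```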

This technique has served investors well and has been demonstrated to offer a predictive advantage as measured by an in-sample, out-of-sample correlation of approximately 20% – 30%, depending on the assets and time horizon. This means that if the market delivers a 10% APR then we expect to deliver an extra 2.5% return.

More than half of this comes from the momentum signal. Momentum is regularly observed to be the factor offering the greatest return and is a nice bedfellow within a diversified portfolio strategy. For a nice chart on factor performance, see: factor investing

Our success in these quantitative forecasting methods has served as a hurdle rate that any machine learning prediction must outperform for it to serve customers better than our existing systems.

Also, having built perhaps the best portfolio back-testing engine in the industry, we have been zealous about biases and proper control processes to ensure the utmost integrity of results.

These two traits served as both barriers and stepping stones, enabling Portfolio ThinkTank to take the long view in designing our stock market prediction system. As with what we have built for portfolio optimization and analysis, we set out to build a prediction system that we expect to use for the next decade or two.

The first thing we did was decide that each stock should have its own prediction model. This means that the model used to predict each stock is uniquely trained on the specific dynamics of that stock.

The prediction for every stock is a composite of three separate prediction systems: a prediction for the stock market, a prediction for the sector, and a prediction model for the stock’s alpha. Alpha is the return that a stock generates independent of the stock market influence.

The logic follows from straightforward investment thinking. Building on the Nobel prize-winning work of William Sharpe and the Capital Asset Pricing Model (CAPM), each stock prediction follows a basic regression equation:

$$E(R_i) = R_f + \beta_i \left( E(R_m) - R_f \right)$$

Where:

$E(R_i)$ = Expected Return of Investment

$E(R_m)$ = Expected Stock Market Return

$R_f$ = Risk-free rate of return (yes, Risk-free is a fallacy, but it's not material so bear with me)

$\beta_i$ = Beta of the investment

Assuming that the risk-free assets offer no effective real return (after inflation) we can simplify the equation to:

$$E(R_i) = \beta_i \, E(R_m)$$

This is called a single-factor model. We use a 2-factor model with both the stock market and the sector's return. These factors strongly explain asset performance, so predicting them well can yield a tremendous advantage. Accordingly, our process takes the form:

$$E(R_i) = \alpha_i + \beta_{i,m} \, E(R_m) + \beta_{i,s} \, E(R_s)$$

In this model, each stock has its own machine-learning-predicted alpha, $\alpha_i$. It then shares the predictions for the market, $E(R_m)$, and the sector, $E(R_s)$, scaled by its own unique observed betas, $\beta_{i,m}$ and $\beta_{i,s}$.
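The composition described above (a stock-specific alpha plus the shared market and sector predictions scaled by the stock's own betas) can be sketched as follows. This is an illustration under assumptions: the names `StockModel`, `estimate_betas`, and `composite_prediction` are hypothetical, and estimating the betas by ordinary least squares on historical returns is an assumption about what "observed betas" means, not a confirmed detail of the production system.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class StockModel:
    alpha: float        # stock-specific predicted alpha (from its own ML model)
    beta_market: float  # observed sensitivity to the market return
    beta_sector: float  # observed sensitivity to the sector return


def estimate_betas(
    stock: Sequence[float], market: Sequence[float], sector: Sequence[float]
) -> Tuple[float, float]:
    """Two-factor OLS with intercept: returns (beta_market, beta_sector)."""
    n = len(stock)
    if n < 3 or len(market) != n or len(sector) != n:
        raise ValueError("need three equal-length series with at least 3 points")
    my, mm, ms = sum(stock) / n, sum(market) / n, sum(sector) / n
    # Sums of squares and cross-products of the demeaned series.
    s_mm = sum((m - mm) ** 2 for m in market)
    s_ss = sum((s - ms) ** 2 for s in sector)
    s_ms = sum((m - mm) * (s - ms) for m, s in zip(market, sector))
    s_my = sum((m - mm) * (y - my) for m, y in zip(market, stock))
    s_sy = sum((s - ms) * (y - my) for s, y in zip(sector, stock))
    det = s_mm * s_ss - s_ms ** 2
    if det == 0:
        raise ValueError("market and sector returns are perfectly collinear")
    # Solve the 2x2 normal equations by Cramer's rule.
    beta_m = (s_my * s_ss - s_sy * s_ms) / det
    beta_s = (s_sy * s_mm - s_my * s_ms) / det
    return beta_m, beta_s


def composite_prediction(
    model: StockModel, market_forecast: float, sector_forecast: float
) -> float:
    """alpha + beta_market * market forecast + beta_sector * sector forecast."""
    return (
        model.alpha
        + model.beta_market * market_forecast
        + model.beta_sector * sector_forecast
    )


# Made-up forecasts and parameters, for illustration only.
model = StockModel(alpha=0.02, beta_market=1.1, beta_sector=0.4)
prediction = composite_prediction(model, market_forecast=0.08, sector_forecast=0.05)
print(f"Composite predicted return: {prediction:.2%}")
```

The market and sector forecasts are computed once and reused across stocks; only the alpha and the two betas are stock-specific.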

That asset’s alpha is computed primarily from information in the company’s financial reports. We use about 70 data points from the financial statements and then expand those 70 data elements across ratios and multiple time scales to create about 500 data features for every stock. The deep learning algorithms find the most predictive combinations of these features and discard the data features with no explanatory power.
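To make the feature expansion concrete, here is a minimal sketch of how a handful of statement items could be expanded into levels, pairwise ratios, and changes over several time scales. The function name, the two example items, and the choice of 1-quarter and 4-quarter lags are hypothetical; the actual 70 inputs and the rules that produce roughly 500 features are not specified in the text.

```python
from itertools import combinations
from typing import Dict, Sequence


def expand_features(
    history: Dict[str, Sequence[float]], lags: Sequence[int] = (1, 4)
) -> Dict[str, float]:
    """Expand raw statement items (quarterly values, oldest first) into features."""
    latest = {name: values[-1] for name, values in history.items()}
    features: Dict[str, float] = dict(latest)  # level features

    # Pairwise ratios between the latest values of every two items.
    for a, b in combinations(sorted(latest), 2):
        if latest[b] != 0:
            features[f"{a}/{b}"] = latest[a] / latest[b]

    # Relative change of each item over several time scales.
    for name, values in history.items():
        for lag in lags:
            if len(values) > lag and values[-1 - lag] != 0:
                features[f"{name}_chg_{lag}q"] = values[-1] / values[-1 - lag] - 1.0
    return features


# Made-up quarterly figures for two statement items.
history = {
    "revenue": [100.0, 110.0, 120.0, 130.0, 150.0],
    "net_income": [10.0, 11.0, 12.0, 13.0, 18.0],
}
features = expand_features(history)
for name, value in features.items():
    print(f"{name}: {value:.4f}")
```

Because ratios grow with the number of item pairs and changes grow with the number of lags, a few dozen raw items quickly become several hundred candidate features, which is why the downstream model has to discard the uninformative ones.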

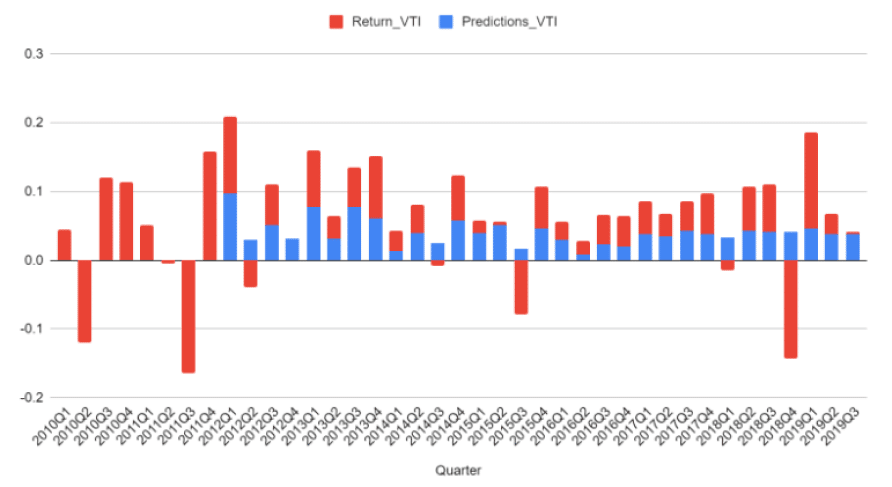

In addition to a market forecast, we are predicting the return from both the stock’s sector beta and the stock’s market beta, as the two combined offer much more explanatory power than a single-factor model. These market and sector A.I. predictions are powered by a cadre of economic data and performance data of key indices.

The chart below provides the results of the meta model’s annual return predictions for 1926 U.S. exchange-listed stocks having sufficient history and data to qualify for the model. These 1926 stocks represent the subset of the Russell 3000 Stock Market Index for which we had sufficient financial statement history at the time of the research in Spring 2022. Please reach out for the latest test results.

The tests cover the 1926 stocks from 2014 through 2021 with each stock’s predicted return correlated against the actual return produced for the same prediction period. Each asset is correlated against its quarterly predictions and gives one observation to the histogram below. A correlation of zero would suggest that predictions were random and a correlation of 1 suggests perfect clairvoyance.
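The per-stock evaluation described above can be sketched as below: for each stock, correlate its series of predictions with the returns actually realized, producing one number per stock for the histogram. The tickers and values are made up, and the use of the Pearson correlation is an assumption, since the text does not name the correlation measure.

```python
import math
from typing import Dict, Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation of two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return float("nan")  # correlation is undefined for a constant series
    return cov / math.sqrt(var_x * var_y)


def per_stock_correlations(
    predicted: Dict[str, Sequence[float]], actual: Dict[str, Sequence[float]]
) -> Dict[str, float]:
    """One correlation per stock: its predictions versus its realized returns."""
    return {ticker: pearson(predicted[ticker], actual[ticker]) for ticker in predicted}


# Hypothetical quarterly predictions and realized returns for two tickers.
predicted = {"AAA": [0.10, 0.20, 0.30], "BBB": [0.10, 0.20, 0.30]}
actual = {"AAA": [0.20, 0.40, 0.60], "BBB": [0.30, 0.20, 0.10]}

correlations = per_stock_correlations(predicted, actual)
for ticker, corr in correlations.items():
    print(f"{ticker}: {corr:+.2f}")
```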

Considerable and assiduous efforts are taken to mitigate bias. This includes walk-forward, out-of-sample validation; separation of data into training, testing, and validation sets; a careful review of feature data; sensible governance of feature data and selection; and, perhaps especially, selection of bias-minimizing objective functions.

For further interpretation, you can treat any stock with a negative correlation as a bad prediction. While a full 10% of our predictions could be classified as bad, only 1.5% would count as really bad, with a correlation below -0.25. It follows that predictions for roughly 90% of the stocks were positively correlated with actual returns, which is the sense in which we describe our directional accuracy as 90%.
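The classification in the paragraph above amounts to counting how many per-stock correlations fall below each threshold. A minimal sketch, with a hypothetical function name and made-up correlations (the thresholds of 0 and -0.25 come from the text):

```python
from typing import Dict, Sequence


def summarize_correlations(
    correlations: Sequence[float], really_bad_below: float = -0.25
) -> Dict[str, float]:
    """Share of stocks whose prediction/actual correlation is bad or really bad."""
    n = len(correlations)
    if n == 0:
        raise ValueError("need at least one correlation")
    return {
        "bad": sum(1 for c in correlations if c < 0) / n,
        "really_bad": sum(1 for c in correlations if c < really_bad_below) / n,
        "positive": sum(1 for c in correlations if c > 0) / n,
    }


# Made-up correlations for ten stocks, for illustration only.
sample = [0.6, 0.3, 0.1, -0.1, -0.3, 0.4, 0.2, 0.5, 0.05, 0.7]
summary = summarize_correlations(sample)
print(summary)
```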

The hard part of conducting such a test is not in producing an attractive result, but in ensuring that any returns produced can be trusted.

The elimination of biases is not easy. This is the downfall of most machine learning projects.

Here follows a year-by-year examination of the results of the tests, followed by an aggregate.

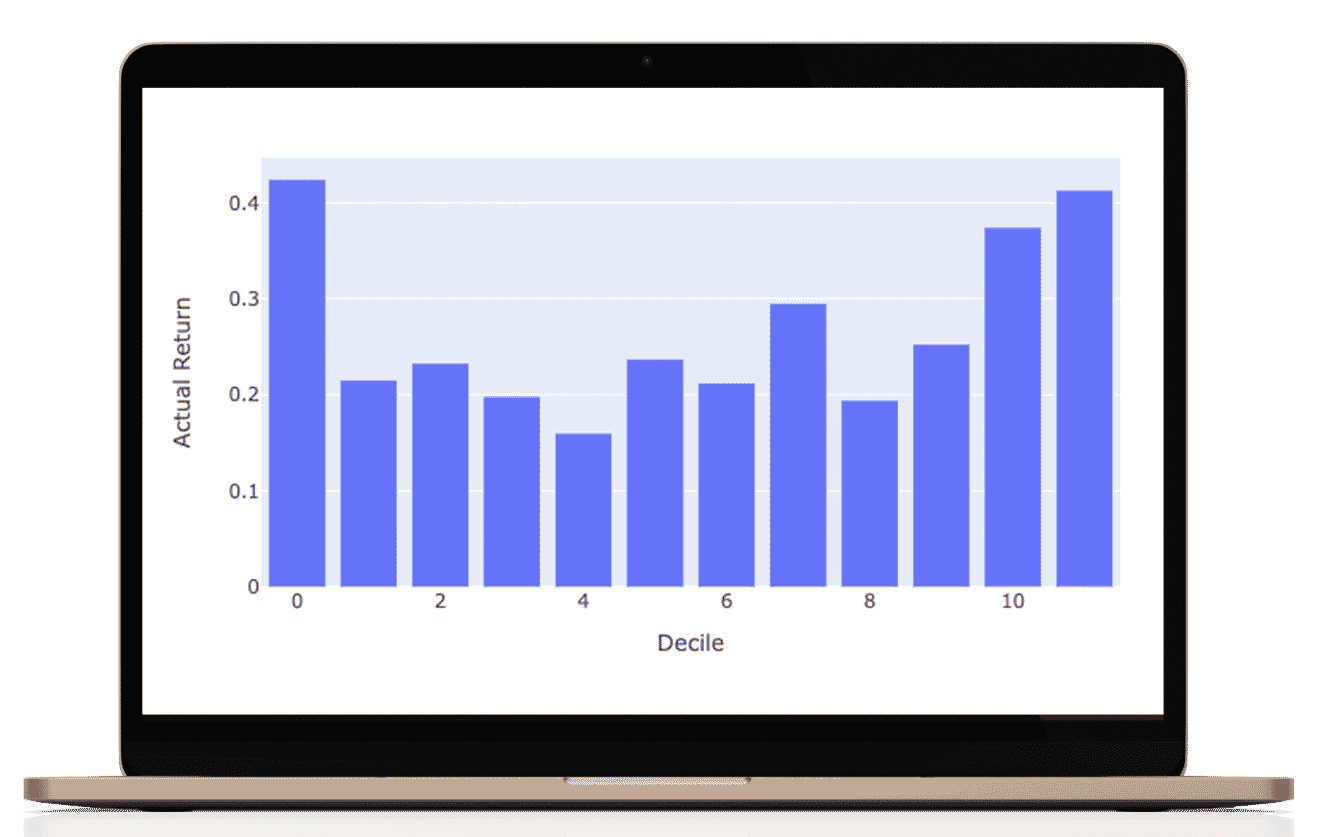

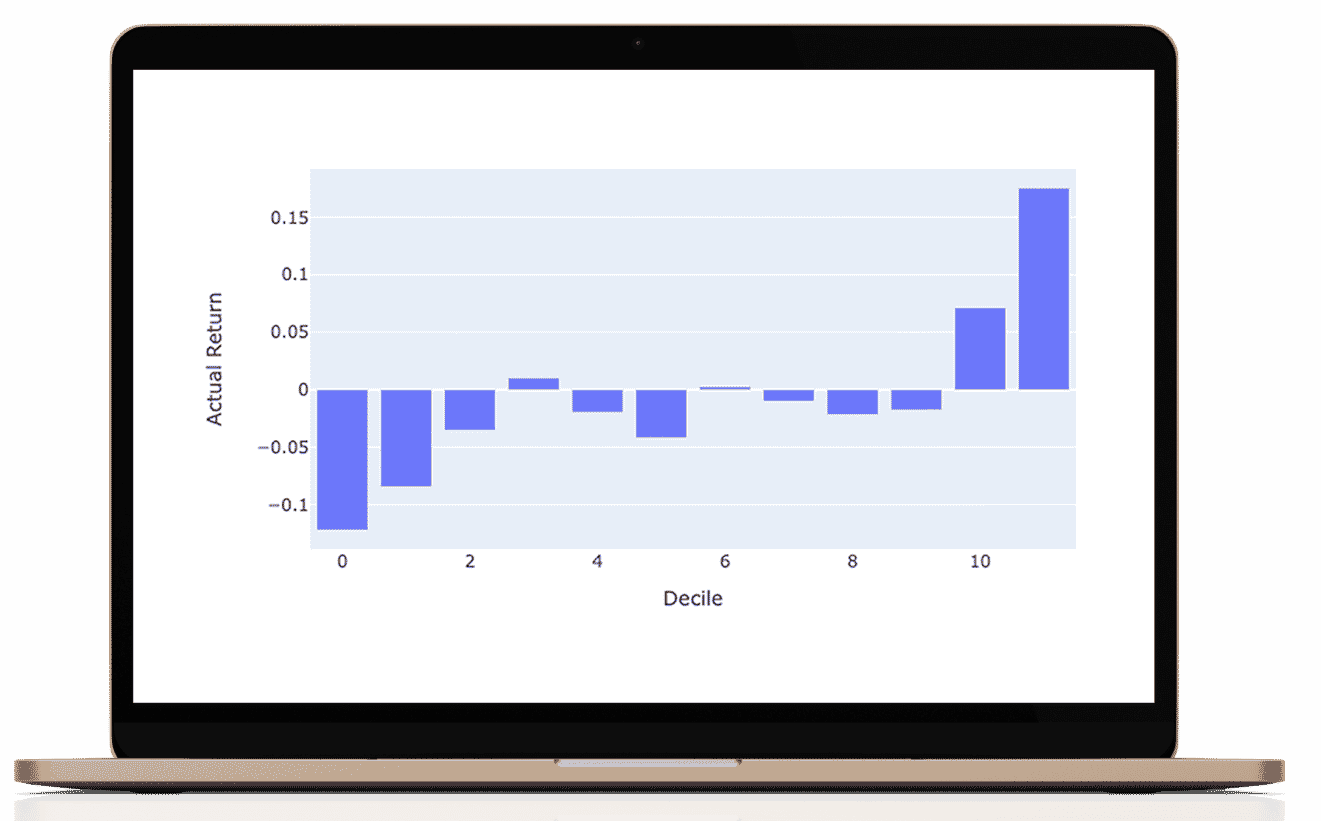

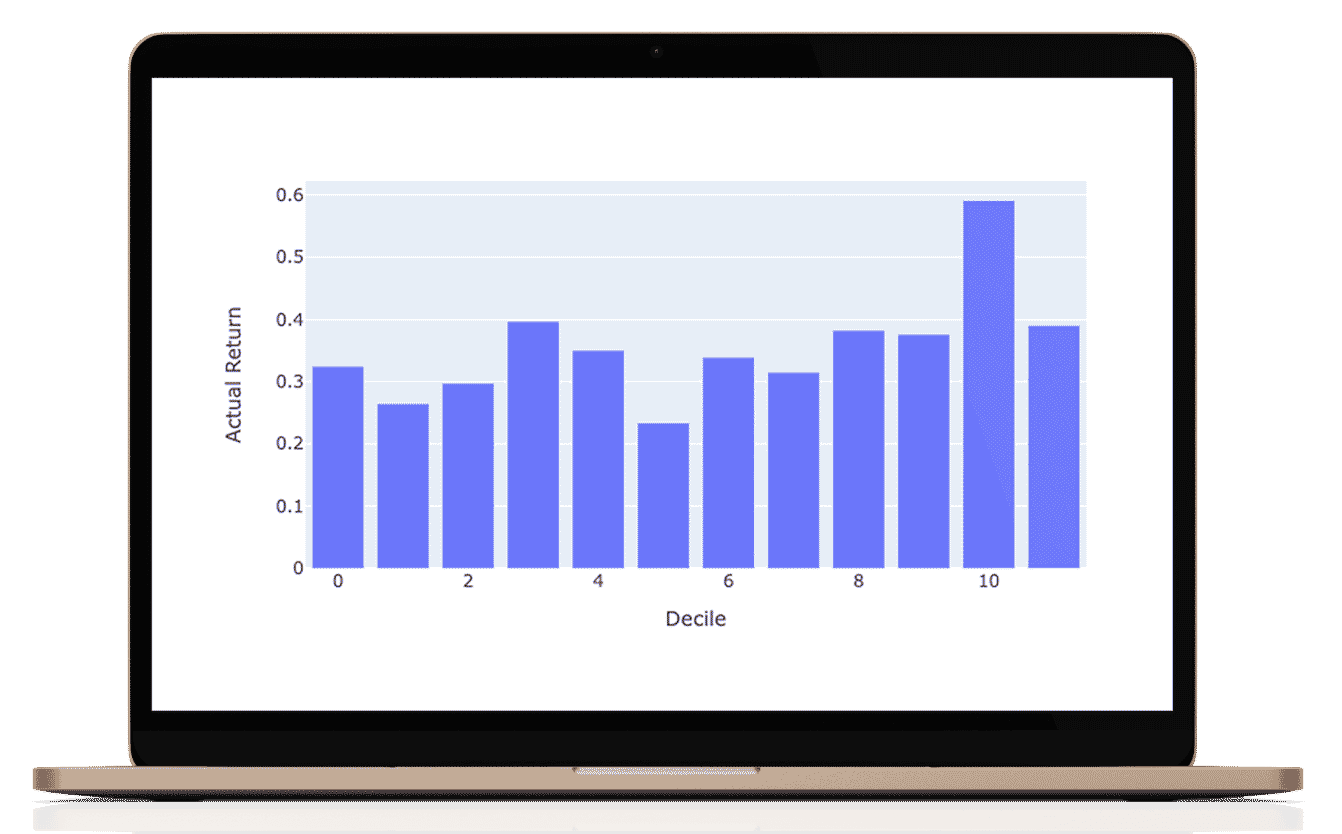

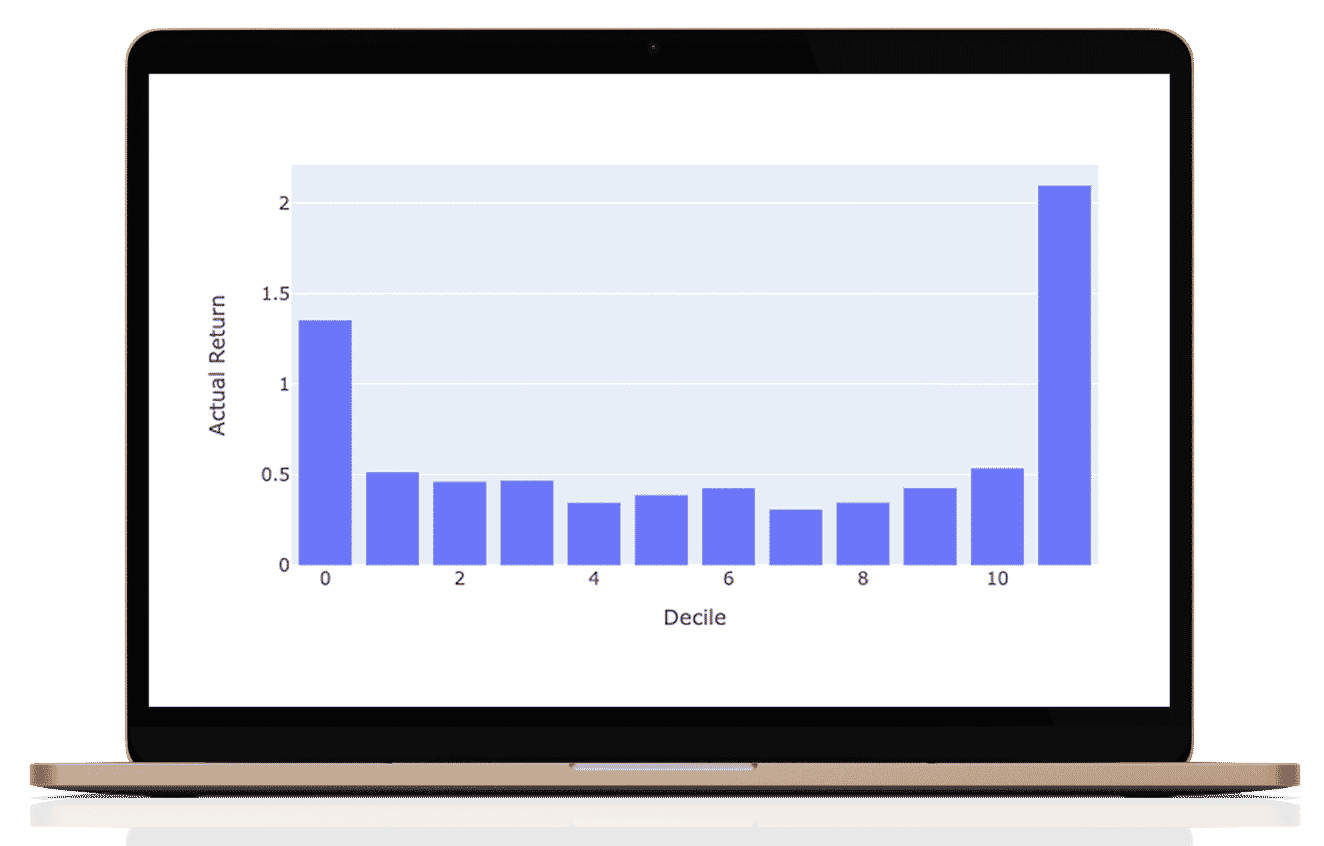

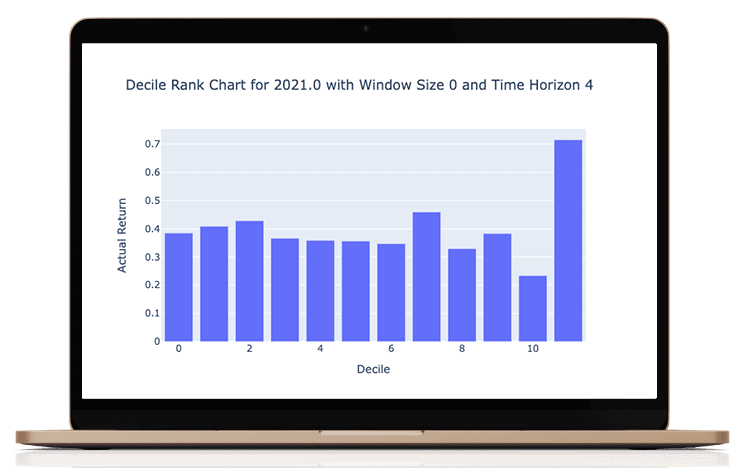

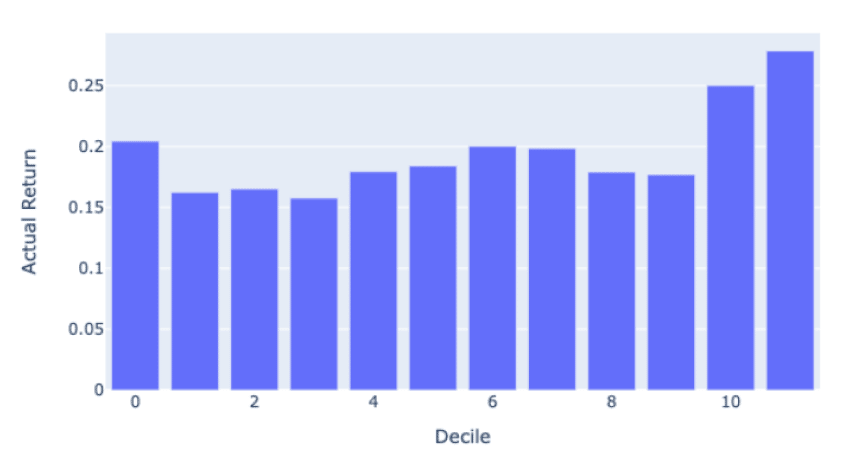

What I love about this manner of performance evaluation is that the success of the results is easy to understand in investment terms. Do the actual returns grow steadily from bar to bar? To make these graphs, we sort all of our predictions into 8 octiles (labeled as deciles in the charts). The rightmost octile (bar) consists of the ⅛ of the 1926 stocks with the highest predicted return for that period, and the leftmost octile consists of those with the lowest predicted return. If the graphed actual returns rise steadily from the leftmost bar to the rightmost, then we know we are adding predictive value.
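The bucketing behind these charts can be sketched as follows: rank stocks by predicted return, split the ranking into eight equal groups, and average the actual returns within each group. The function names and the sample data are hypothetical; this is a sketch of the procedure as described, not the production code.

```python
from typing import List, Sequence


def bucket_mean_actuals(
    predicted: Sequence[float], actual: Sequence[float], n_buckets: int = 8
) -> List[float]:
    """Mean actual return per bucket, ordered from lowest to highest prediction."""
    n = len(predicted)
    if n != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if n < n_buckets:
        raise ValueError("need at least one stock per bucket")
    # Indices of stocks sorted by predicted return, lowest first.
    order = sorted(range(n), key=lambda i: predicted[i])
    means = []
    for b in range(n_buckets):
        members = order[b * n // n_buckets : (b + 1) * n // n_buckets]
        means.append(sum(actual[i] for i in members) / len(members))
    return means


def rises_steadily(values: Sequence[float]) -> bool:
    """True when each bucket's mean is at least as high as the one before it."""
    return all(a <= b for a, b in zip(values, values[1:]))


# Made-up data: 16 stocks whose actual returns track their predictions.
predicted = [i / 100 for i in range(16)]
actual = [0.5 * p for p in predicted]

octile_means = bucket_mean_actuals(predicted, actual)
print([round(m, 4) for m in octile_means])
print("Monotonic:", rises_steadily(octile_means))
```

With real data the bars will rarely be perfectly monotonic; what matters is whether the general left-to-right rise persists across years.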

As you can see, the results are not perfect (never trust perfect AI-based stock predictions!) but are good enough to obtain a realistic and persistent performance advantage.

We tested a few variations around the Time Horizon. In these charts, Time Horizon 4 means 4 quarters, or a 1-year prediction. Our results were comparable across multiple time horizons, which we have configured as an input variable for generating the predictions. Window size refers to the amount of historical data we use. Window size = 0 means we use all the data available preceding the date of the prediction. We observed that the more data we used, the better the results; this is common in machine-learning applications.
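The two parameters above fit naturally into a walk-forward scheme. The sketch below generates, for each prediction date, the list of earlier quarters allowed for training. The function name and the `min_train` parameter are hypothetical. One assumption is labeled loudly: a training sample is only used once its full `horizon`-quarter outcome has been realized before the prediction date, which is a common way to avoid look-ahead bias but is not stated in the text.

```python
from typing import List, Tuple


def walk_forward_splits(
    n_periods: int, horizon: int = 4, window_size: int = 0, min_train: int = 2
) -> List[Tuple[List[int], int]]:
    """Return (training quarter indices, prediction quarter index) pairs.

    window_size = 0 uses all eligible history; otherwise only the most
    recent `window_size` eligible quarters are kept.
    """
    if horizon < 1 or window_size < 0:
        raise ValueError("horizon must be >= 1 and window_size >= 0")
    splits = []
    # A prediction at quarter t needs its own outcome to be observable.
    for t in range(n_periods - horizon + 1):
        # Assumption: a training sample s is eligible only if s + horizon <= t.
        last_eligible = t - horizon
        if last_eligible < 0:
            continue
        train = list(range(last_eligible + 1))
        if window_size > 0:
            train = train[-window_size:]
        if len(train) >= min_train:
            splits.append((train, t))
    return splits


# Twelve quarters of data, 1-year horizon, all available history.
expanding = walk_forward_splits(n_periods=12, horizon=4, window_size=0)
# Same setup, but only the two most recent eligible quarters.
rolling = walk_forward_splits(n_periods=12, horizon=4, window_size=2)

for train, t in expanding:
    print(f"predict at quarter {t}, train on quarters {train}")
```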

For this period, the S&P 500 produced an annual return of 14.75% and the S&P 1500 produced an annual return of 14.50%.

Portfolios selected from our top decile over the same period would average a return of 28%.

Accordingly, one could judge the performance of the model by buying the top decile and shorting the bottom decile. This long/short, market-neutral portfolio strategy would yield a nearly 8% return.

Scroll the charts below to explore the performance of the sector models. For the sectors, we used a regression of the best-fit sector irrespective of SIC or S&P classification.

The future of A.I. stock prediction is bright. It is also moving fast. Stock market predictions and LLMs have a lot in common, so many of the developments around LLMs can be recycled for stock prediction. More data, more features, more models, more results, more feedback, more learning: this is a machine-learning flywheel. As we continue to build out the model, we expect results to improve. We also expect more competition.

We also expect that a considerable double barrier to adoption will create excess returns for the earlier adopters. Barrier #1: Baby boomers have thrived on simple, passive index investing. I suspect that some will never consider changing, even in the face of clear evidence supporting a better approach. Barrier #2: the clear evidence itself. The evidence is slow to accumulate and even slower to disseminate. This creates a classic information asymmetry. You have plenty of time to get on the right side of this. Portfolio ThinkTank is working to shift that informational advantage from the likes of DE Shaw, Two Sigma and Rentech to investors and advisors everywhere: the ones smart enough to look for the evidence and brave enough to act.

Eventually, however, the world will change and investors will not be content to merely own the stock market in a low-cost index fund. Investors will want portfolios that are not just custom tailored, but customized, parameterized, personalized and optimized to the Nth degree of granularity, depending on how interested, educated and sophisticated an investor they are.

We believe that the combination of stock-specific ML model formation set within the CAPM prediction architecture provides a real opportunity for the predictions to help in delivering better performance for investors across assets, economic conditions, and time.

You can use our predictions now in our latest portfolio optimization software. You can also take our prediction model and make it your own with customizations. See https://advisors.portfoliothinktank.com/product/custom-pre-trained-deep-learning-predictions/ to learn more about how we can customize an A.I model to invest like you want.

James Damschroder

Special thanks to Chenyue Zhang, Bingshen Lu and Marissa Rubb for their project contributions and talents. Also, thanks to Professor Thomas Diciccio, Academic Advisor and the MPS faculty led by Xiaolong Yang, Ph.D., Sr. Lecturer, Sr. Associate Director, MPS Program. Special thanks to all of the data science students having made contributions over the years.

